Note: If you are looking for info on what to do if your Synology RAID crashes.

Before you do ANYTHING, please export a support dump.

If the volume has entered Read-Only mode, any time you reboot you lose your logs.

This may be crucial to getting your data!

Apps > Support Centre > Support Services > Log Generation > Generate Logs

TL;DR : If you want support, use genuine RAM. I paused Data Scrubbing on the array some months ago then performed an OS update, corrupting the file system.

It new years eve, Due to a recent spike of Covid-19 cases in the state we are holidaying in we have just cancelled our holiday. Needing to make a mad dash for the Victorian border with NSW before it closes at midnight. Celebrating NYE in a Macca’s (Macdonald’s) car park in Bairnsdale, we get home about 4AM

As part of being decent humans, we get tested for Covid-19 the next morning and self isolate waiting for the test results, I finally hit the hay after hours of driving and queuing.

Only to be woken by my wife at shortly after.

“Something in the Garage is Beeping”

Walking into the Garage, I see a telltale orange LED on our shiny new Synology DS920+ NAS and immediately login to its web interface.

Not degraded, not offline, CRASHED!

My heart instantly sank, my face turned white and my eldest son asked if I was going to be okay.

I genuinely didn’t know.

A quick look at the error messages and the unit was suggesting getting all the data off it ASAP, the thing was I didn’t have this data backed up, or anywhere to store it.

It should be pointed out here, that the NAS actually puts the array in read-only mode to protect your data when this occurs.

Prevention is better than cure

I can already hear you saying “A NAS isn’t backup” and that’s true. Its not

Ironically I had just purchased a larger rackmount Synology NAS (an RS2414RP+) as I was so pleased with this one that I wanted to have a separate copy of our essential data (the old 3-2-1 rule) and the production workloads in the house.

So here I was stuck with trying to fix it without losing my data, against Synology’s recommendation, I decided to have a look.

A note on supportability and RAM

I started searching online for anyone else with similar issues and found a ton of dead ends and frankly, misinformation. Including some posts that claim Synology branded RAM is blessed by Luna herself and using anything else is Heresy.

In one of my previous articles. I noted I had used a non-genuine memory module in the machine as it was listed as compatible with the CPU. Synology, however, has not validated these particular modules as compatible with this model. You should be aware this pretty much stops any support you will get from them instantly. Hence the Herecy.

So be aware, if you do decide to use non-genuine RAM. You are on your own if things go wrong.

Synology’s response

I’ll be frank. Synology’s initial response did not impress me in the slightest. A week later after I’ve calmed down. I can completely understand from a business and technical perspective why they have this stance.

I’m sure they have had their fair share of support cases taking months, only to find the RAM bit flipped something somewhere and corrupted the filesystem.

Anyway, 3 days after the case was raised with tons of troubleshooting, logs and updates from me. I got a canned response.

Dear Customer,

Thank you for contacting Synology support.

The file system errors may result from defective disks, improper shutdown and defective RAM modules. According to the system log, the disks are healthy and there was no improper shutdown when the file system errors occurred.

We notice that you added an additional RAM module and that should be the root cause of the file system errors. Please remove the additional RAM after backing up all data, recreate the volume with file system errors and then restore the data back.

If you would like to expand the RAM, please always use the compatible RAM module for DS920+:

Notice the line “and that should be the root cause of the file system errors”

No checking of anything at all, just. You have aftermarket ram. Bugger off.

Realistically, the odds of RAM causing this issue (and it didn’t) are slim but now you can see why everyone says to use genuine RAM.

My comment to this is that if RAM is that critical to file system stability. It should be ECC ram, but that would need a change of processor and Intel to actually support ECC on lower-end CPU’s like AMD do.

Naturally, I escalated even stating that the memory module had been removed, but they persisted.

They were of the opinion that as I had used non-genuine RAM, it caused the file system corruption whilst it was installed and would need a full reset before it would be supported.

Damage Assessment

First things first, what do we have and what have we lost?

A look at the Storage Pool and the Drives showed me we were in a good place, no physical damage reported. But I ran the drives through quick checks to make sure.

Jumping onto the SMB file shares, everything appears to be there, and files will copy off just fine, but I cant write to anything.

Luckily the NAS has thrown the volume into Read-Only mode to protect itself from more damage, neat.

At this point I quickly scrabbled some HDD’s together and started copying data off the NAS… (more on that later)

But why?

So the digging commences into what happened.

I went to run a data scrub on the Storage Pool and found I had manually paused it using SSH some time ago, resuming it ended with no errors reported after a few hours (in the UI… this is important)

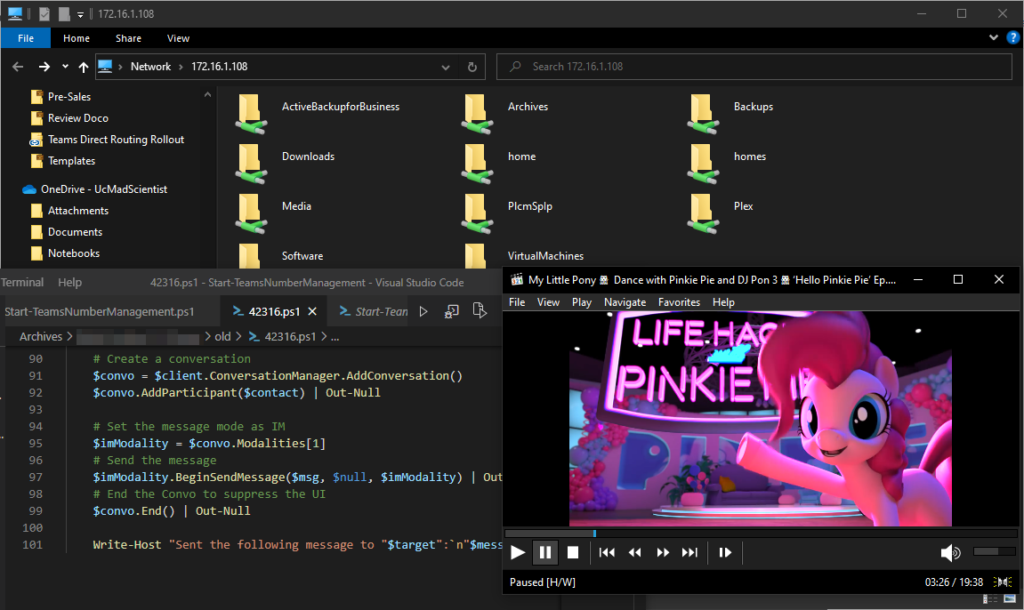

So like all good administrators, I started looking at the logs in the hope of understanding what happened.

On the Synology I headed to Apps (Main Menu) > Log Centre > Logs and took a look

The system had been up a few days without incident, other than getting a little low on space and issues sending me backup completion emails.

It also appeared to try to send me a new years celebration email (that’s my theory and I’m sticking with it) but nothing too out of the ordinary.

Before getting too into this, I should explain my setup. I’m running 4 disks in an SHR1 array (Synology’s implementation of RAID5 effectively) with a BTRFS volume sitting on top of that.

As there Was an issue with BTRFS and RAID5 that has since been patched, Synology rolled their own implementation of BTRFS. Instead of using BTRFS’s native RAID implementation, Synology uses good ol’ Linux MD RAID managed with MDADM

So next, I logged into the unit’s terminal via SSH and just ran a couple of quick sanity checking commands.

Firstly checking the status of the MD by pulling /proc/mdstat

cat /proc/mdstat

Initially, it showed the paused scrubbing, but all the partitions are there and in use as indicated by the uppercase U’s and no underscores indcating missing or failed disks.

Synology also use an “E” flag which as as far as the community can tell means the disk is in an error state.

So then I ran

sudo mdadm --detail /dev/md2

to try and get a little more info, and as far as mdadm is concerned, the raid is peachy!

So, lets take a look at /var/log/messages

2

3

4

5

6

7

8

9

10

11

12

13

2021-01-02T02:52:46+11:00 Schrodinger kernel: [ 1533.244531] BTRFS warning (device dm-1): dm-1 checksum verify failed on 6127496364032 wanted 929C1A24 found 33017964 level 129

2021-01-02T02:52:46+11:00 Schrodinger kernel: [ 1533.257284] md2: syno_self_heal_is_valid_md_stat(499): md's current state is not suitable for data correction

2021-01-02T02:52:46+11:00 Schrodinger kernel: [ 1533.268394] md2: syno_self_heal_is_valid_md_stat(499): md's current state is not suitable for data correction

2021-01-02T02:52:46+11:00 Schrodinger kernel: [ 1533.279479] md2: syno_self_heal_is_valid_md_stat(499): md's current state is not suitable for data correction

2021-01-02T02:52:46+11:00 Schrodinger kernel: [ 1533.290572] md2: syno_self_heal_is_valid_md_stat(499): md's current state is not suitable for data correction

2021-01-02T02:52:46+11:00 Schrodinger kernel: [ 1533.301865] BTRFS warning (device dm-1): dm-1 checksum verify failed on 6127496364032 wanted 929C1A24 found 33017964 level 129

2021-01-02T02:52:46+11:00 Schrodinger kernel: [ 1533.314623] md2: syno_self_heal_is_valid_md_stat(499): md's current state is not suitable for data correction

2021-01-02T02:52:46+11:00 Schrodinger kernel: [ 1533.325766] md2: syno_self_heal_is_valid_md_stat(499): md's current state is not suitable for data correction

2021-01-02T02:52:46+11:00 Schrodinger kernel: [ 1533.336896] md2: syno_self_heal_is_valid_md_stat(499): md's current state is not suitable for data correction

2021-01-02T02:52:46+11:00 Schrodinger kernel: [ 1533.347995] md2: syno_self_heal_is_valid_md_stat(499): md's current state is not suitable for data correction

2021-01-02T02:52:46+11:00 Schrodinger kernel: [ 1533.359255] BTRFS warning (device dm-1): dm-1 checksum verify failed on 6127496364032 wanted 929C1A24 found 33017964 level 129

2021-01-02T02:52:46+11:00 Schrodinger kernel: [ 1533.371990] BTRFS error (device dm-1): BTRFS: dm-1 failed to repair btree csum error on 6127496364032, mirror = 1

Well, that doesn’t look like “No errors” now does it!

What’s happening here is that MD has requested BTRFS run a resync of the RAID array using its metadata, but that’s failing as the OS has put the FileSystem into Read-Only mode to protect it.

Eventually, I took a support report, raised a ticket with Synology (see above), and started looking through the log files in the support debug file (you can open it with a zip utility) and sure enough, I can see BTFRS errors just before the system determined the volume had crashed, placed it in read-only mode at 8:24 PM on 01-01-2021, and sent me an email.

021-01-01T20:24:54+11:00 Schrodinger kernel: [397675.432155] BTRFS error (device dm-1): BTRFS: dm-1 failed to repair btree csum error on 6127496364032, mirror = 1

2021-01-01T20:24:54+11:00 Schrodinger kernel: [397675.432155]

2021-01-01T20:24:54+11:00 Schrodinger kernel: [397675.488365] BTRFS warning (device dm-1): dm-1 checksum verify failed on 6127496364032 wanted 929C1A24 found 33017964 level 129

2021-01-01T20:24:54+11:00 Schrodinger kernel: [397675.501691] BTRFS warning (device dm-1): dm-1 checksum verify failed on 6127496364032 wanted 929C1A24 found 33017964 level 129

2021-01-01T20:24:54+11:00 Schrodinger kernel: [397675.514869] BTRFS warning (device dm-1): dm-1 checksum verify failed on 6127496364032 wanted 929C1A24 found 33017964 level 129

2021-01-01T20:24:54+11:00 Schrodinger kernel: [397675.527975] BTRFS warning (device dm-1): dm-1 checksum verify failed on 6127496364032 wanted 929C1A24 found 33017964 level 129

2021-01-01T20:24:54+11:00 Schrodinger kernel: [397675.541064] BTRFS warning (device dm-1): dm-1 checksum verify failed on 6127496364032 wanted 929C1A24 found 33017964 level 129

2021-01-01T20:24:54+11:00 Schrodinger kernel: [397675.554139] BTRFS warning (device dm-1): dm-1 checksum verify failed on 6127496364032 wanted 929C1A24 found 33017964 level 129

2021-01-01T20:24:54+11:00 Schrodinger kernel: [397675.567214] BTRFS warning (device dm-1): dm-1 checksum verify failed on 6127496364032 wanted 929C1A24 found 33017964 level 129

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.374608] BTRFS error (device dm-1): BTRFS: dm-1 failed to repair btree csum error on 6127496364032, mirror = 2

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.374608]

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.391087] ------------[ cut here ]------------

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.391305] BTRFS: error (device dm-1) in __btrfs_free_extent:7191: errno=-5 IO failure

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.391311] BTRFS: error (device dm-1) in btrfs_run_delayed_refs:3221: errno=-5 IO failure

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.420550] WARNING: CPU: 1 PID: 19330 at fs/btrfs/extent-tree.c:7191 __btrfs_free_extent+0x1a6/0xf40 [btrfs]()

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.432016] Modules linked in: xt_ipvs ip_vs_rr ip_vs xt_mark iptable_mangle br_netfilter bridge stp aufs macvlan veth xt_conntrack xt_addrtype nf_conntrack_ipv6 nf_defrag_ipv6 ip6table_filter ip6_tables ipt_MASQUERADE xt_REDIRECT nf_nat_masquerade_ipv4 xt_nat iptable_nat nf_nat_ipv4 nf_nat_redirect nf_nat xt_recent xt_iprange xt_limit xt_state xt_tcpudp xt_multiport xt_LOG nf_conntrack_ipv4 nf_defrag_ipv4 iptable_filter ip_tables cifs udf isofs loop tcm_loop(O) iscsi_target_mod(O) syno_hddmon(P) target_core_ep(O) target_core_multi_file(O) target_core_file(O) target_core_iblock(O) target_core_mod(O) syno_extent_pool(PO) rodsp_ep(O) hid_generic usbhid hid usblp usb_storage uhci_hcd ehci_pci ehci_hcd nf_conntrack x_tables bonding geminilake_synobios(PO) leds_lp3943 exfat(O) btrfs i915 drm_kms_helper syscopyarea sysfillrect sysimgblt fb_sys_fops cfbfillrect cfbcopyarea cfbimgblt drm drm_panel_orientation_quirks iosf_mbi fb fbdev video backlight button synoacl_vfs(PO) hfsplus md4 hmac r8168(O) i2c_algo_bit vxlan ip6_udp_tunnel udp_tunnel fuse vfat fat crc32c_intel aesni_intel glue_helper lrw gf128mul ablk_helper arc4 cryptd ecryptfs sha256_generic ecb aes_x86_64 authenc des_generic ansi_cprng cts md5 cbc cpufreq_powersave cpufreq_performance acpi_cpufreq processor cpufreq_stats dm_snapshot dm_bufio crc_itu_t crc_ccitt quota_v2 quota_tree psnap p8022 llc sit tunnel4 ip_tunnel ipv6 zram sg etxhci_hcd xhci_pci xhci_hcd usbcore usb_common [last unloaded: ip_tables]

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.577566] CPU: 0 PID: 19330 Comm: kworker/u8:8 Tainted: P O 4.4.59+ #25426

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.586798] Hardware name: Synology DS920+/DS920+, BIOS M.410.00 03/23/2020

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.594689] Workqueue: events_unbound __btrfs_async_run_delayed_refs [btrfs]

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.602663] 0000000000000000 ffff880215a2fad8 ffffffff812ae28b ffff880215a2fb20

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.611032] ffffffffa0616c7a ffff880215a2fb10 ffffffff81047c5c 00000471e7710000

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.619407] 0000000000000000 ffff880362f38000 00000000fffffffb ffff880365d44a80

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.627780] Call Trace:

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.630605] [<ffffffff812ae28b>] dump_stack+0x4d/0x72

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.636440] [<ffffffff81047c5c>] warn_slowpath_common+0x7c/0xb0

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.643244] [<ffffffff81047cd7>] warn_slowpath_fmt+0x47/0x50

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.649769] [<ffffffffa056a526>] __btrfs_free_extent+0x1a6/0xf40 [btrfs]

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.657450] [<ffffffff8105bef9>] ? insert_work+0x69/0xb0

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.663585] [<ffffffffa056e88c>] __btrfs_run_delayed_refs+0x6dc/0x10f0 [btrfs]

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.671848] [<ffffffff8107c56f>] ? __wake_up+0x3f/0x50

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.677790] [<ffffffffa059380f>] ? btrfs_finish_ordered_io+0x1df/0x660 [btrfs]

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.686060] [<ffffffffa0572ef5>] btrfs_run_delayed_refs+0xc5/0x310 [btrfs]

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.693940] [<ffffffffa0573207>] __btrfs_async_run_delayed_refs+0x37/0x50 [btrfs]

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.702485] [<ffffffff8105d00f>] process_one_work+0x13f/0x360

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.709098] [<ffffffff8105d6c6>] worker_thread+0x46/0x440

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.715321] [<ffffffff8105d680>] ? max_active_store+0x60/0x60

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.721933] [<ffffffff81062194>] kthread+0xc4/0xe0

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.727475] [<ffffffff810620d0>] ? kthread_create_on_node+0x170/0x170

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.734860] [<ffffffff8156843f>] ret_from_fork+0x3f/0x80

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.740987] [<ffffffff810620d0>] ? kthread_create_on_node+0x170/0x170

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.748468] ---[ end trace bbfb2895fbcd5cb8 ]---

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.753805] BTRFS: error (device dm-1) in __btrfs_free_extent:7191: errno=-5 IO failure

2021-01-01T20:24:55+11:00 Schrodinger kernel: [397676.762871] BTRFS: error (device dm-1) in btrfs_run_delayed_refs:3221: errno=-5 IO failure

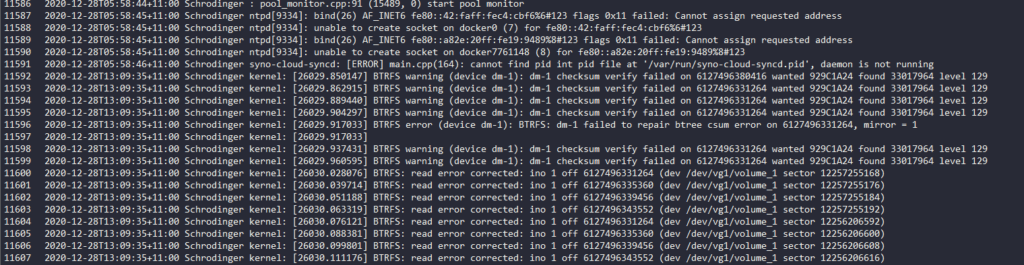

Scrolling back, I can see these read errors had been happening for a while.

2020-12-28T13:09:35+11:00 Schrodinger kernel: [26029.850147] BTRFS warning (device dm-1): dm-1 checksum verify failed on 6127496380416 wanted 929C1A24 found 33017964 level 129

2020-12-28T13:09:35+11:00 Schrodinger kernel: [26029.862915] BTRFS warning (device dm-1): dm-1 checksum verify failed on 6127496331264 wanted 929C1A24 found 33017964 level 129

2020-12-28T13:09:35+11:00 Schrodinger kernel: [26029.889440] BTRFS warning (device dm-1): dm-1 checksum verify failed on 6127496331264 wanted 929C1A24 found 33017964 level 129

2020-12-28T13:09:35+11:00 Schrodinger kernel: [26029.904297] BTRFS warning (device dm-1): dm-1 checksum verify failed on 6127496331264 wanted 929C1A24 found 33017964 level 129

2020-12-28T13:09:35+11:00 Schrodinger kernel: [26029.917033] BTRFS error (device dm-1): BTRFS: dm-1 failed to repair btree csum error on 6127496331264, mirror = 1

A clue

Looking through the logs, you can see these errors were logged at 1:09 PM on the 28th of December, 4 days before the reported failure.

Then it struck me, I updated the NAS before I went away! I didn’t think of it as I did it at 6 in the morning and it was days before the failure!

Sure enough, scrolling back through both the logs I can see the update applied 7 hours before BTRFS corruption was reported!

2020-12-28T05:51:31+11:00 Schrodinger updater: updater.c:6331 Start of the updater...

2020-12-28T05:51:33+11:00 Schrodinger updater: updater.c:6602 ==== Start flash update ====

2020-12-28T05:51:33+11:00 Schrodinger updater: updater.c:6606 This is X86 platform

2020-12-28T05:51:33+11:00 Schrodinger updater: updater.c:6623 The SynoBoot partitions exist.

2020-12-28T05:51:33+11:00 Schrodinger updater: updater.c:3878 SYNORedBootUpdCheckAndApply(3878): Skip bootloader update, no uboot_do_upd.sh exists

2020-12-28T05:51:34+11:00 Schrodinger updater: updater.c:615 file [zImage] is being copied to [/tmp/bootmnt]

2020-12-28T05:51:34+11:00 Schrodinger updater: updater.c:623 file [/tmp/bootmnt/zImage] process done.

2020-12-28T05:51:34+11:00 Schrodinger updater: updater.c:615 file [rd.gz] is being copied to [/tmp/bootmnt]

2020-12-28T05:51:34+11:00 Schrodinger updater: updater.c:623 file [/tmp/bootmnt/rd.gz] process done.

2020-12-28T05:51:34+11:00 Schrodinger updater: updater.c:615 file [grub_cksum.syno] is being copied to [/tmp/bootmnt]

2020-12-28T05:51:34+11:00 Schrodinger updater: updater.c:623 file [/tmp/bootmnt/grub_cksum.syno] process done.

2020-12-28T05:51:34+11:00 Schrodinger updater: updater.c:606 file [/tmp/bootmnt/updater] is being removed

2020-12-28T05:51:34+11:00 Schrodinger updater: updater.c:623 file [/tmp/bootmnt/updater] process done.

2020-12-28T05:51:34+11:00 Schrodinger updater: updater.c:606 file [/tmp/bootmnt/VERSION] is being removed

2020-12-28T05:51:34+11:00 Schrodinger updater: updater.c:623 file [/tmp/bootmnt/VERSION] process done.

2020-12-28T05:51:34+11:00 Schrodinger updater: updater.c:615 file [model.dtb] is being copied to [/tmp/bootmnt]

2020-12-28T05:51:34+11:00 Schrodinger updater: updater.c:623 file [/tmp/bootmnt/model.dtb] process done.

2020-12-28T05:51:46+11:00 Schrodinger updater: updater.c:1065 This model DS920+

needs no factory upgrade

2020-12-28T05:51:49+11:00 Schrodinger updater: updater.c:6991 ==== Finish flash update ====

2020-12-28T05:51:49+11:00 Schrodinger updater: updater.c:5166 successfully backup image for System Migration in path (/tmpRoot/.syno/patch).

2020-12-28T05:51:49+11:00 Schrodinger updater: updater.c:7028 ==== Finish updater post-action ====

2020-12-28T05:51:49+11:00 Schrodinger updater: updater.c:7082 Congratulations!! The update has been completed!! Do configupdate when next bootup.

2020-12-28T05:53:34+11:00 Schrodinger synoscgi_SYNO.Core.System_1_reboot[11275]: system_sys_init.c:95 synopoweroff: System is going to reboot

--lots of stuff removed--

2020-12-28T05:57:55+11:00 Schrodinger root: == DSM 6.2 25426-3 finished boot up ==

I also noticed this in the logs, looking at previous boots it appears normal.

2020-12-28T05:56:24+11:00 Schrodinger kernel: [ 36.590885] md: invalid raid superblock magic on sata3p5

2020-12-28T05:56:24+11:00 Schrodinger kernel: [ 36.596827] md: sata3p5 does not have a valid v0.90 superblock, not importing!

2020-12-28T05:56:24+11:00 Schrodinger kernel: [ 36.673858] md: invalid raid superblock magic on sata4p5

2020-12-28T05:56:24+11:00 Schrodinger kernel: [ 36.679798] md: sata4p5 does not have a valid v0.90 superblock, not importing!

2020-12-28T05:56:24+11:00 Schrodinger kernel: [ 36.749370] md: invalid raid superblock magic on sata1p5

2020-12-28T05:56:24+11:00 Schrodinger kernel: [ 36.760755] md: sata1p5 does not have a valid v0.90 superblock, not importing!

2020-12-28T05:56:24+11:00 Schrodinger kernel: [ 36.840809] md: invalid raid superblock magic on sata2p5

2020-12-28T05:56:24+11:00 Schrodinger kernel: [ 36.846754] md: sata2p5 does not have a valid v0.90 superblock, not importing!

2020-12-28T05:56:24+11:00 Schrodinger kernel: [ 36.871236] md: sata3p2 has different UUID to sata3p1

2020-12-28T05:56:24+11:00 Schrodinger kernel: [ 36.880785] md: sata4p2 has different UUID to sata3p1

2020-12-28T05:56:24+11:00 Schrodinger kernel: [ 36.890330] md: sata1p2 has different UUID to sata3p1

2020-12-28T05:56:24+11:00 Schrodinger kernel: [ 36.899873] md: sata2p2 has different UUID to sata3p1

Pulling the files off

Whilst we are pulling the files off, I ended up with screens and screens of this

[15606.366252] BTRFS info (device dm-1): no csum found for inode 421 start 34418688

[15974.772335] BTRFS warning (device dm-1): dm-1 checksum verify failed on 6228931624960 wanted 0 found E4E3BDB6 level 0

Eventually, the checksum errors turned up in the UI..

The fix was to reboot the NAS and copy the files again.

So what was it?

It’s been a while since the incident so I don’t have the screenshots and logs. but as best as I could tell, the issue was that I manually paused disk scrubbing a long time ago and then performed a DSM update.

Whatever was in that update clearly didn’t like the long backlog of the BTRFS scrubbing and corrupted the file system.

Lesson learned, don’t pause data scrubbing and forget about it.

Pingback: Replacing my Windows Storage server, with a NAS? - UcMadScientist.com

First off thanks for your extended troubleshooting description. Given my experience, Synology blaming the usage of 3rd party RAM modules is a cheap excuse. I googled this problem and everyone was given the same explanation. I guess this only happens to somewhat heavy DSM users, which means they have probalbbly already expanded their RAM with reasonably prized alternatives to Synology rebranded modules.

In my case my DS1821+ had already frozen up twice in the last month. While it responded to ping and the power led did start blinking after pressing the power button for a few seconds, nothing else responded, and the system hadn’t shut down after more than 4 hours of blinking.

I guessed it might be due to low RAM capacity (still had the original 4GB) although I never saw high RAM usage. Still I bought alternative compatible 2x 16GB from Amazon as Synology rebranded RAM is ridiculously expensive.

The day I received these modules I logged into DSM to shut it down and do the upgrade, but found the volume had entered read-only status about one and a half days ago. On system log I found several services had crashed due to insufficient RAM. However, current RAM usage was very low.

Having had HDD failures several times since I first started using Synology NAS about 10 years ago (my first one was a used DS211j), I’ve always had scheduled SMART testing on all my NAS, with daily fast tests and weekly extended tests. This year I added scheduled data scrubbing, which must have taken RAM usage to the limit. I also have both my NASes with SHR-2 redundancy and BTRFS file system. On top of that, I hyperbackup this NAS to my DS1618+ and vice versa (they are on different locations). This means I wasn’t too stressed out of losing my data and I was willing to try a reboot before hearing back from Synology support, as I’m mainly using this NAS for Surveillance Station (plus hyper and active backup).

I wasn’t able to take the read-only volume back to normal. I looked into Log Center and there were about 20 services that had stopped due to insufficient RAM, althought currently it was using about 46%. So I’m guessing this is why the option to bring the Volume back to normal wasn’t doing anything at all, not even messaging me that it wasn’t able to do so. Researching this problem on google I heeded the warning to download logs prior to rebooting the system and sent it to Synology Support.

I also coudn’t start hyperbackup to force a backup update, I’m guessing also because of some needed service that had stopped. I sent the log to Synology Support but grew impatient waiting for a response.

I wanted to follow your troubleshooting but I coudn’t ssh into the NAS. I tried to open Control Panel to see if I needed to enable SSH, but it wouldn’t load. I’m guessing the underlying services had also crashed.

Since the crytical and important folders were hyperbacked up (except the 12 camera recordings on Surveilance Station), I took the chance and rebooted the system. DSM was taking forever to log in. When DSM desktop finally appeared, there were no icons and I wasn’t able to load anything, and clicking on the Main Menu icon didn’t show anything also. I waited about 30 minutes and still no icons were loaded. I hard rebooted the system again (pressing power icon for 10 seconds).

On this second reboot the system started normally and I got 2 messasges stating the read-only Volume auto-repaired itself, without my intervention. I then turned the system off and replaced the original 4GB module with the two new alternative 16GB modules. DSM has been running flawlessly ever since.

A few days later I got the same response from Synology Support many other users with this problem have gotten, except for the part that their alternative RAM is probabbly the source of the issue, since I still had the original 4GB RAM module when my volume went into read-only mode. Most of the response was clearly a copy/paste answer since they completely ignored my report of using SHR-2, healthy fast and extended SMART tests results, and BTRFS and hyperbackup usage.

Since then I have lowered datascrubbing schedule to once a month on both my NASes as a precaution and activated Active Insight.